Datacenter scale computing

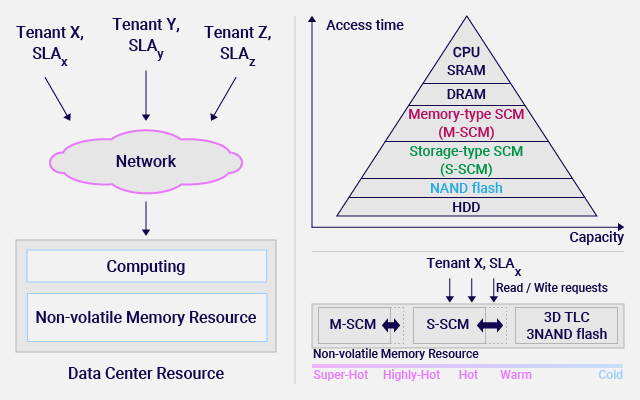

The amount of data processed in data centers is growing as real-time data processing, such as AI for IoT, advances. Conventionally, data processing is performed at rack scale. In the future, high-speed non-volatile memory (storage class memory, SCM) and high-speed interconnects will remove the storage and interconnect bottlenecks. As a result, huge amounts of data can be handled in an integrated manner across data centers spanning several hundred meters. We are working on Datacenter scale computing by pooling enormous computation and storage resources such as CPU, GPU, DRAM, SCM, and Flash memory, and by reconfiguring the hardware to suit each application's characteristics. For example, Super-Hot data with the highest access frequency are stored in high-speed, small-capacity memory-type SCM (M-SCM), Hot data with high access frequency are stored in medium-speed, medium-capacity storage-type SCM (S-SCM), and Cold data that are not accessed frequently are stored in Flash memory.
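The tiered placement described above can be illustrated with a minimal sketch. The access-rate thresholds, the function names `classify` and `place`, and the use of accesses per second as the hotness metric are all assumptions made for illustration; the actual placement policy is not specified here.

```python
from enum import Enum
from typing import Dict


class Tier(Enum):
    M_SCM = "M-SCM"  # high-speed, small-capacity memory-type SCM
    S_SCM = "S-SCM"  # medium-speed, medium-capacity storage-type SCM
    FLASH = "Flash"  # Flash memory for Cold data


# Hypothetical thresholds in accesses per second (not from the source).
SUPER_HOT_MIN = 1000.0
HOT_MIN = 10.0


def classify(accesses_per_sec: float) -> Tier:
    """Map an access rate to a storage tier using the assumed thresholds."""
    if accesses_per_sec >= SUPER_HOT_MIN:
        return Tier.M_SCM  # Super-Hot data
    if accesses_per_sec >= HOT_MIN:
        return Tier.S_SCM  # Hot data
    return Tier.FLASH      # Cold data


def place(blocks: Dict[str, float]) -> Dict[str, Tier]:
    """Assign every named data block to a tier based on its access rate."""
    return {name: classify(rate) for name, rate in blocks.items()}


if __name__ == "__main__":
    rates = {"sensor_index": 5000.0, "daily_log": 50.0, "archive": 0.1}
    for name, tier in place(rates).items():
        print(name, "->", tier.value)
```

In a pooled, reconfigurable system the thresholds would presumably be tuned per application rather than fixed, since the capacity of each tier and the access pattern both vary.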