Computation in memory

Computation in memory (CiM) with non-volatile memories executes deep learning inference and training at extremely high speed and with low power consumption.

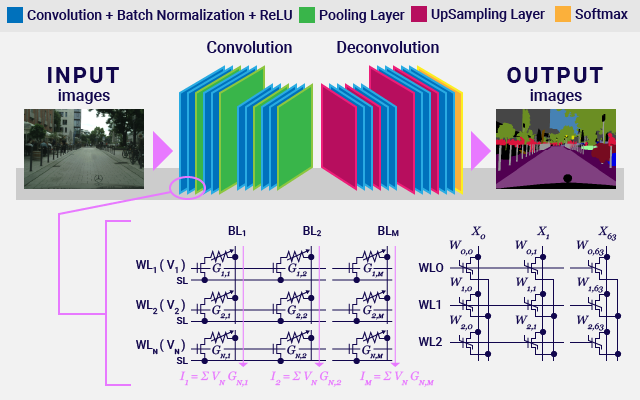

Autonomous driving applications perform a huge number of multiply-accumulate (MAC) operations for image and object recognition. Takeuchi Lab conducts research on CiM suited to deep learning. Non-volatile memories such as Resistive RAM (ReRAM) and Ferroelectric FET (FeFET) store the weights of neural networks. Deep learning inference is executed at high speed by driving the non-volatile memory cell arrays simultaneously, in a massively parallel fashion.
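To make the idea of MAC operations inside a memory array more concrete, the following is a minimal Python sketch of an idealized crossbar. It is not Takeuchi Lab's implementation. It assumes that each weight is stored as a cell conductance, that inputs are applied as row voltages, and that each column current is the sum of voltage times conductance over all rows, so one array read yields a full matrix-vector product. The differential-pair mapping for signed weights, the function names (`crossbar_mac`, `weights_to_conductance_pairs`, `cim_matvec`), and the `g_max` parameter are all illustrative assumptions. Device noise, wire resistance, and ADC quantization are ignored.

```python
def crossbar_mac(voltages, conductances):
    """Ideal crossbar read: column current I_j = sum_i V_i * G_ij."""
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage is required per row")
    # In hardware all columns are summed at once; here we loop in software.
    return [
        sum(voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


def weights_to_conductance_pairs(weights, g_max=1.0):
    """Map signed weights to two non-negative conductance arrays.

    Assumption: a positive weight is stored in the 'plus' array and a
    negative weight in the 'minus' array, scaled so the largest
    magnitude maps to g_max (a hypothetical maximum cell conductance).
    """
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = g_max / w_max
    g_plus = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) * scale for w in row] for row in weights]
    return g_plus, g_minus, scale


def cim_matvec(inputs, weights):
    """Matrix-vector product via two ideal crossbar reads."""
    g_plus, g_minus, scale = weights_to_conductance_pairs(weights)
    i_plus = crossbar_mac(inputs, g_plus)
    i_minus = crossbar_mac(inputs, g_minus)
    # Subtract the two column currents and undo the conductance scaling.
    return [(p - m) / scale for p, m in zip(i_plus, i_minus)]


# Example: 2 inputs (rows) and 2 outputs (columns).
weights = [[1.0, -2.0],
           [0.5, 4.0]]
inputs = [2.0, 1.0]
outputs = cim_matvec(inputs, weights)
print(outputs)
```

In this sketch the list comprehension stands in for what the array does physically: every cell contributes its current to its column at the same time, which is where the parallelism described above would come from.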