Transformer

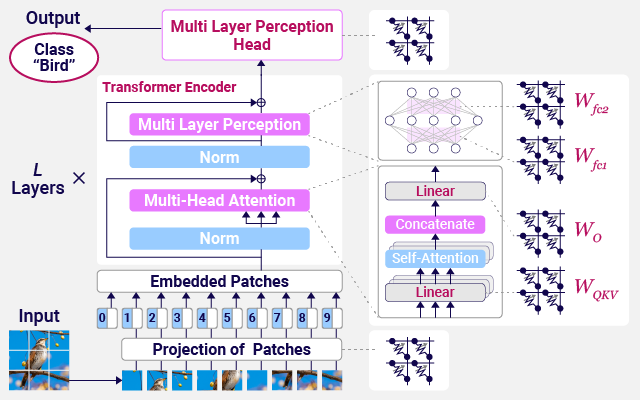

The Transformer model uses the attention mechanism for tasks such as natural language processing and image classification. It requires different operations from Convolutional Neural Networks (CNNs), which are built from convolutional layers, and Recurrent Neural Networks (RNNs), which are suited to time-series data. The attention mechanism learns to direct “attention” to the important parts of past data. In the era of lightweight Transformer models and edge computing, different types of Computation-in-Memory (CiM) are used to match the computing characteristics of each part of the Transformer. The Transformer operates with high energy efficiency by using SRAM CiM for the attention layer, whose operands change with each input and therefore require reprogramming, and ReRAM CiM for the linear layer, whose trained weights stay fixed and therefore require data retention.
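The difference between the two layer types can be illustrated with a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is not a model of any CiM hardware; the function names (`linear`, `attention`) and weight names (`w_q`, `w_k`, `w_v`) are illustrative assumptions. The point it tries to show is that the linear layers multiply by fixed weights, while the attention step multiplies by matrices that are recomputed from every input.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax over one row."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def linear(x, weight):
    """Linear layer: `weight` is fixed after training, so it only needs to be retained."""
    return matmul(x, weight)

def attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over the rows (tokens) of x."""
    q, k, v = linear(x, w_q), linear(x, w_k), linear(x, w_v)
    scale = math.sqrt(len(q[0]))
    # k and v are derived from the current input, so the matrices used in the
    # next two products change with every input (the "reprogramming" case).
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

# Two tokens with two features each; identity weights keep the arithmetic easy to follow.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0]]
output, attn_weights = attention(tokens, identity, identity, identity)
```

In this toy case each row of `attn_weights` sums to 1, and each token attends most strongly to itself because its query matches its own key.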