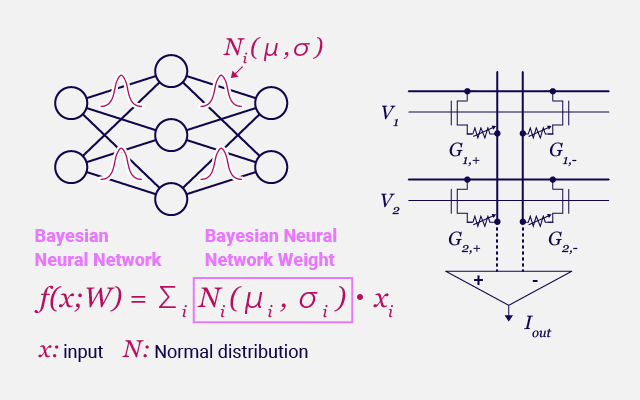

Bayesian neural network

Exploiting ReRAM variability in Bayesian neural networks, which treat weights as probability distributions, enables low-power, high-accuracy inference.

A Bayesian neural network (BNN) quantifies the uncertainty of its inference results by treating weights as probability distributions rather than fixed values. The inherent variation of the ReRAM cells used in Computation-in-Memory is leveraged to naturally reproduce the weight variability that a BNN requires. The mean and standard deviation of each weight can be controlled using two ReRAM cells in a differential pair, which allows the trained BNN weights to be represented directly in the memory array. This approach eliminates the need for random number generation circuits and enables low-power, high-accuracy inference.
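The differential-pair idea can be illustrated with a small numerical sketch. It is not the authors' device model: it assumes that each ReRAM cell's conductance varies as an independent Gaussian around its programmed value, so that the effective weight `G_pos - G_neg` has mean `mean_pos - mean_neg` and standard deviation `sqrt(std_pos**2 + std_neg**2)`. The function names, the single-layer softmax classifier, and all numeric values are hypothetical and chosen only for illustration.

```python
import numpy as np


def sample_differential_weights(mean_pos, std_pos, mean_neg, std_neg, n_samples, rng):
    """Draw effective weights w = G_pos - G_neg for a differential cell pair.

    Assumption (not from the source): each cell's conductance is an
    independent Gaussian around its programmed mean.
    """
    shape = (n_samples,) + np.shape(mean_pos)
    g_pos = rng.normal(mean_pos, std_pos, size=shape)  # "positive" cell reads
    g_neg = rng.normal(mean_neg, std_neg, size=shape)  # "negative" cell reads
    return g_pos - g_neg


def predict_with_uncertainty(x, weight_samples):
    """Average softmax outputs over sampled weights (Monte Carlo inference).

    x: input vector of shape (n_in,)
    weight_samples: array of shape (n_samples, n_in, n_out)
    Returns the per-class mean probability and its standard deviation.
    """
    logits = np.einsum("i,sio->so", x, weight_samples)
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    return probs.mean(axis=0), probs.std(axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Illustrative programmed values for a 3-input, 2-class layer.
    mean_pos = np.array([[1.0, 0.2], [0.3, 0.9], [0.5, 0.5]])
    mean_neg = np.array([[0.2, 0.8], [0.6, 0.1], [0.5, 0.4]])
    w = sample_differential_weights(mean_pos, 0.3, mean_neg, 0.4, 1000, rng)
    mean_p, std_p = predict_with_uncertainty(np.array([1.0, 0.5, -0.2]), w)
    print("mean class probabilities:", mean_p)
    print("std of class probabilities:", std_p)
```

In this sketch the randomness comes entirely from the simulated cell reads, which mirrors how the hardware approach replaces a dedicated random number generator. The spread of the class probabilities across samples serves as a simple measure of predictive uncertainty.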