Adversarial training

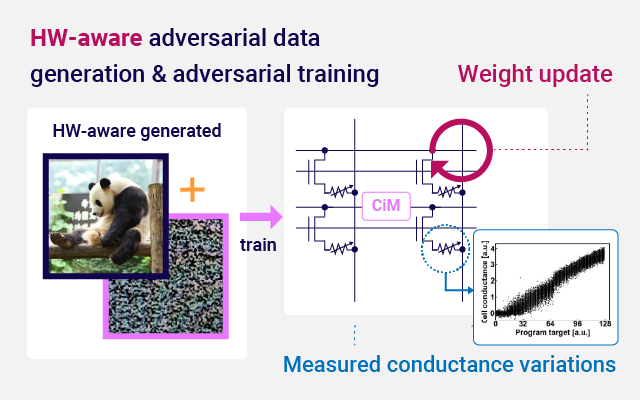

Adversarial training exploits the computational errors caused by device variation, a non-ideality of Computation-in-Memory, to increase robustness against adversarial attacks, which aim to make neural network models malfunction.

Adversarial training is a technique that increases the robustness of neural network models against adversarial attacks. By including adversarial samples during training, the model learns to make accurate predictions even when its inputs carry perturbations crafted to change the output. Computation errors caused by non-idealities of the Computation-in-Memory architecture, such as memory write variations, can improve robustness to adversarial attacks without the need for additional hardware. Furthermore, by injecting Computation-in-Memory variation into the neural network weights, adversarially trained models maintain high inference accuracy.
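As a rough illustration of how adversarial samples and weight variation can be combined in one training loop, the sketch below trains a small logistic-regression classifier with NumPy. It is not the method used in this work. Several things are assumptions made only for the example: the attack is FGSM (fast gradient sign method), device variation is modeled as multiplicative Gaussian noise on each weight, and the names `fgsm`, `noisy_weights`, `train`, `eps`, and `sigma` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """Shift each input by eps along the sign of the loss gradient w.r.t. the input."""
    p = sigmoid(x @ w + b)
    # For logistic regression with cross-entropy loss, dL/dx = (p - y) * w.
    grad_x = (p - y)[:, None] * w[None, :]
    return x + eps * np.sign(grad_x)

def noisy_weights(w, sigma, rng):
    """Assumed variation model: each weight is scaled by (1 + sigma * N(0, 1))."""
    return w * (1.0 + sigma * rng.standard_normal(w.shape))

def train(x, y, eps=0.1, sigma=0.1, lr=0.5, steps=300, seed=0):
    """Adversarial training with fresh weight noise injected at every step."""
    rng = np.random.default_rng(seed)
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(steps):
        # Weights as they might look after being written to the memory array.
        w_dev = noisy_weights(w, sigma, rng)
        # Craft adversarial samples against the noisy model.
        x_adv = fgsm(x, y, w_dev, b, eps)
        p = sigmoid(x_adv @ w_dev + b)
        # Gradient computed with the noisy weights, applied to the clean weights.
        w -= lr * (x_adv.T @ (p - y)) / len(y)
        b -= lr * np.mean(p - y)
    return w, b

def accuracy(x, y, w, b):
    return np.mean((sigmoid(x @ w + b) > 0.5) == (y > 0.5))
```

To try it, one could generate two Gaussian clusters, call `train(x, y)`, and compare `accuracy` on clean inputs with accuracy on `fgsm(x, y, w, b, eps)`. Setting `sigma=0` reduces the loop to plain FGSM adversarial training, which gives a baseline for judging the effect of the injected variation.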